Protecting apps on Fly with Arcjet

Fly automatically builds and deploys your containerized app globally, but you still need to handle the application security.

Writing code is not enough: you’ve also got to deploy it, and that means thinking about how to secure your deployments. This post shares some tactics developers can use to secure their container deployments.

Securing a deployment means considering every security touchpoint, from writing the code through to deploying and running it in production. Production is where your application is most exposed, and good security is all about layers. And let’s be honest: as developers, we often only think about the code.

Nothing is 100% secure, so if (when) one layer is breached, you want several others standing in the way. There are plenty of security tools to help as you write code, but what about when it's running?

In this post I’ll share some of the tactics we use at Arcjet to help us deploy with more confidence and less risk. You can only go fast if you have good guardrails!

There’s a reason why keeping your dependencies up to date is the first item on our Next.js security checklist! Supply chain vulnerabilities are real, and running outdated software is an easy way to invite trouble. On top of that, if there is a big security update you need to apply, being on the latest versions makes patching far smoother. There’s nothing worse than applying major version updates under the stress of an actively exploited vulnerability.

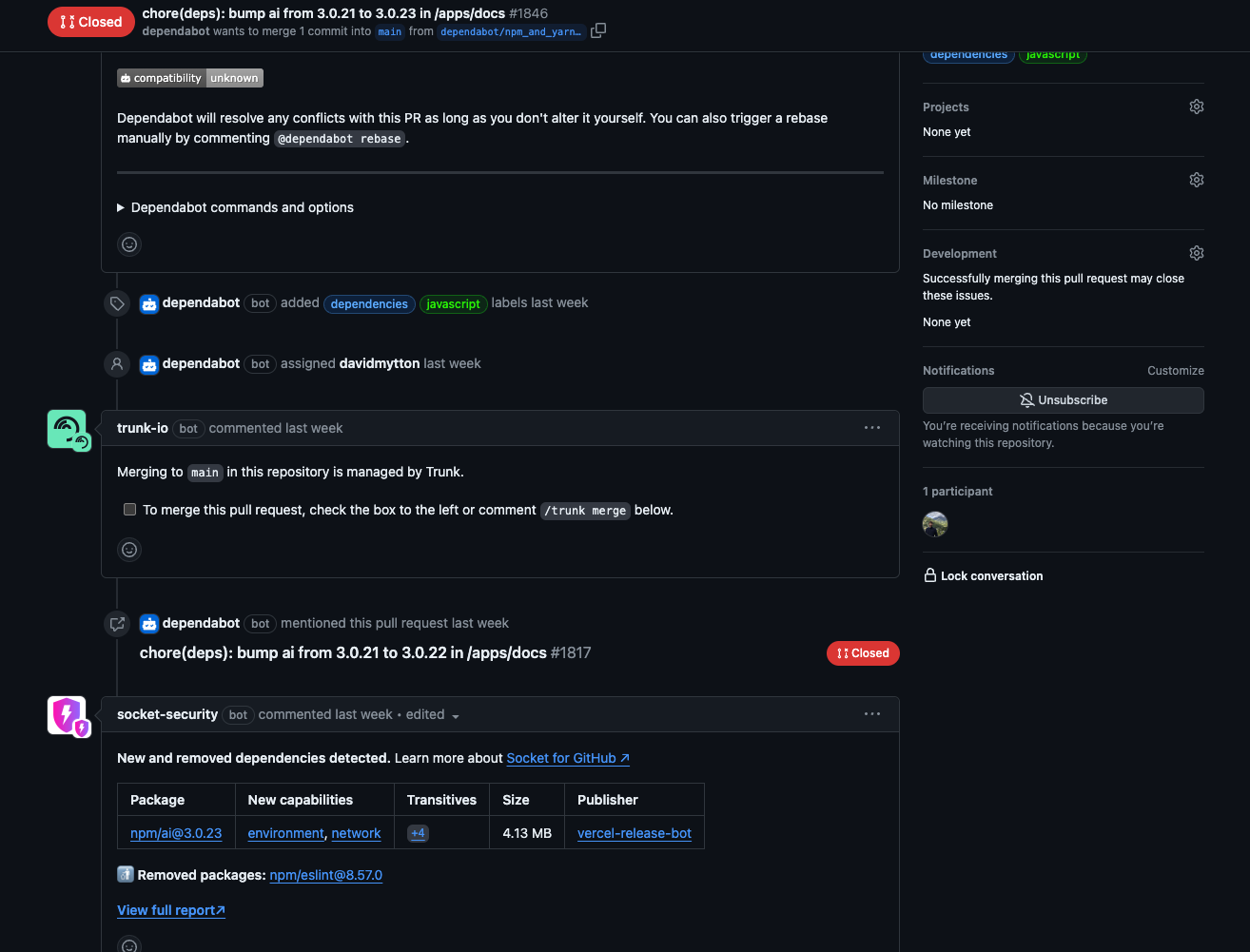

I recommend Dependabot and Socket to help you get timely updates and scan them for potential issues.

Alpine Linux is a popular choice of container base image because it’s small and ships with very few default packages, which limits the attack surface. However, it has some quirks you need to be aware of because it uses musl rather than glibc. For example, Go does not officially support it, and there was a lengthy Hacker News discussion about the issues that can arise.

At Arcjet, we use wolfi-os because it offers a very minimal image with package inventory output (an SBOM) and operates on a rolling release basis. This was very helpful during the recent xz backdoor because we could quickly check the versions of installed packages and verify that we weren’t running sshd. They also quickly released a new version of wolfi-os with the updated packages. All we needed to do was trigger a new build to access them.
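As a rough illustration of that kind of check, here is a minimal shell sketch. It assumes you have already pulled the installed xz version out of the SBOM or the package manager, and the `xz_status` helper is hypothetical rather than part of Wolfi’s tooling. It flags 5.6.0 and 5.6.1, the two upstream xz releases that shipped the backdoor (CVE-2024-3094), including package revision suffixes such as `-r0`.

```shell
#!/bin/sh
# Hypothetical helper: classify an xz version string.
# xz 5.6.0 and 5.6.1 are the upstream releases that contained the
# backdoor (CVE-2024-3094). Package revisions like "5.6.1-r0" also match.
xz_status() {
  case "$1" in
    5.6.0 | 5.6.0-* | 5.6.1 | 5.6.1-*) echo "vulnerable" ;;
    *) echo "ok" ;;
  esac
}

# Example: check the xz binary inside an image that has XZ Utils installed.
# `xz --version` prints e.g. "xz (XZ Utils) 5.4.5" on its first line,
# so the version is the fourth field:
#   xz_status "$(xz --version | head -n 1 | awk '{print $4}')"
```

Matching on exact versions (rather than "anything from 5.6.0 up") keeps the check narrow: it answers only "are we running one of the known-bad releases?", which was the urgent question at the time.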

If you want something even more locked down, Distroless is a great option because it doesn’t even include a shell. That can be inconvenient if you want to get a shell for debugging, which is useful locally but rarely needed in production, so Distroless also provides “debug” image variants that include a BusyBox shell. However, Distroless is not a rolling distribution: its images are based on Debian 12 or Debian 11 and track upstream Debian releases, which can be a problem if you need the most up-to-date packages.

If an attacker managed to get your application to execute arbitrary code, they could download a reverse shell into your container and use it to connect into your environment, or exfiltrate files and other data. Starting your container with a read-only filesystem mitigates this risk: in the event of such a code execution vulnerability, it stops code from being modified and prevents additional files from being downloaded to disk.

You can test this locally by running a Docker container with the --read-only flag:

```
docker run -it --rm --read-only ubuntu
root@eb0661959807:/# mkdir test
mkdir: cannot create directory 'test': Read-only file system
```

This is good practice because it stops an attacker from installing tools or tampering with files, but it does restrict what you can do, e.g. if you need temporary directories for sessions or file uploads. Consider how you could replace writing files locally with a different option, e.g. uploading to object storage or storing sessions in a database. This is some extra work, but it moves the security responsibility elsewhere, e.g. to AWS S3 for object storage.

On Kubernetes, including managed services such as AWS EKS, you would set `securityContext.readOnlyRootFilesystem` to `true` in each container spec (`spec.containers[].securityContext`). You’ll need to do some more work if the app needs a temporary filesystem, for example mounting an `emptyDir` volume at the path it writes to.
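To see how the pieces fit together, here is a minimal shell sketch of a startup self-check. It assumes the container is started read-only with a writable scratch area, for example `docker run --read-only --tmpfs /tmp my-image` locally (`my-image` is a placeholder) or an `emptyDir` volume mounted at `/tmp` on Kubernetes. The hypothetical `can_write` helper actually tries to create (and then remove) a probe file rather than inspecting permissions.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if a file can actually be created in dir $1.
# The probe file is removed again straight away.
can_write() {
  _probe=$(mktemp "$1/.write-probe.XXXXXX" 2>/dev/null) || return 1
  rm -f "$_probe"
}

# Example entrypoint usage, assuming the app only needs to write under /tmp:
#   if can_write /; then echo "warning: root filesystem is writable" >&2; fi
#   can_write /tmp || { echo "error: /tmp is not writable" >&2; exit 1; }
```

Warning (rather than failing) when `/` is writable keeps local development working while still making a misconfigured production deployment visible in the logs.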

Running as a nonroot user makes it more difficult to escalate privileges via remote code execution or a reverse shell. If an attacker can make your application execute code, that code runs with the same permissions as your application. That means if your code runs as root, any attacker code will also run as root!

Docker containers run as root by default:

```
docker run -it --rm ubuntu
root@eb0661959807:/# whoami
root
```

Containers themselves are one layer of security because they are a more limited environment, but container escape vulnerabilities exist and can be exploited. And if you’re root, you can install whatever you like, such as cryptominers, which could leave you paying your cloud provider for the extra resources.

You can lock this down by setting the USER directive to a nonroot user inside the container. If you run your own container orchestration system (i.e. rather than a managed service such as AWS EKS or GKE), it’s also worth considering Docker rootless mode or Podman, so that the container runtime itself runs as a nonroot user on the host. These are separate mitigations for different issues: USER limits what code inside the container can do, while a rootless runtime limits what an attacker gains if they escape the container. It’s worth understanding the differences.

For example, building a Distroless Node 20 image with a nonroot USER uses the nonroot tag to ensure the nonroot user is already available:

```dockerfile
# Build image
FROM node:20 AS build-env

# Copy files in
COPY . /app
WORKDIR /app

# Install dependencies
RUN npm install --omit=dev

# Create the base image from Distroless
FROM gcr.io/distroless/nodejs20-debian12:nonroot
COPY --from=build-env /app /app
WORKDIR /app
EXPOSE 3000

# Switch to non-root user
USER nonroot

# The Distroless Node.js image's entrypoint is node, so only the script is needed
CMD ["index.js"]
```

Dockerfile showing building a Distroless Node 20 image with a nonroot USER. Adapted from this example.

If you were using Alpine, you’d need to create the user first. The build stage also uses Alpine here, because native Node modules compiled against glibc in the Debian-based node:20 image may not run on Alpine’s musl:

```dockerfile
# Build image (also Alpine, so any native modules are built against musl)
FROM node:20-alpine AS build-env

# Copy files in
COPY . /app
WORKDIR /app

# Install dependencies
RUN npm install --omit=dev

# Create the base image from Alpine
FROM node:20-alpine

# Create a non-root user
RUN addgroup -S nongroup && adduser -S -G nongroup nonroot

COPY --from=build-env /app /app
WORKDIR /app
EXPOSE 3000

# Switch to non-root user
USER nonroot

CMD ["node", "index.js"]
```

Dockerfile showing building an Alpine Node 20 image with a nonroot USER. Adapted from this example.
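The USER directive is what actually does the work here. On images that include a shell, such as the Alpine one above (Distroless has none), you could also add a belt-and-braces check to the entrypoint so the container refuses to start as root even if someone overrides the user, e.g. with `docker run --user root`. This is a minimal sketch; the `require_non_root` helper and the `entrypoint.sh` name are hypothetical.

```shell
#!/bin/sh
# entrypoint.sh (hypothetical): refuse to start the app as root.

# Succeeds unless the given UID (default: the current effective UID) is 0.
require_non_root() {
  _uid="${1:-$(id -u)}"
  if [ "$_uid" -eq 0 ]; then
    echo "refusing to start: running as root (UID 0)" >&2
    return 1
  fi
}

# In the real entrypoint you would then run:
#   require_non_root || exit 1
#   exec node index.js
```

You would copy the script into the image and point ENTRYPOINT at it. On Kubernetes, setting `securityContext.runAsNonRoot: true` gives a similar guarantee at the platform level, without needing a shell in the image.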

We don’t store secrets in our containers. Instead, we use platform functionality to provide them at runtime. On AWS this means using the AWS SDK to load values from AWS Secrets Manager at initialization, and on Fly.io we use their secrets storage system. We also have secrets scanning as part of our Trunk rules and use GitHub’s secret protection features to avoid pushing secrets into Git.
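On Fly.io, secrets set with `fly secrets set` are exposed to the app as environment variables at runtime, so nothing sensitive needs to be baked into the image. A minimal sketch of failing fast when one is missing might look like this; the variable names are hypothetical, and in practice you would likely do the equivalent check in the app’s own language at startup.

```shell
#!/bin/sh
# Hypothetical helper: fail fast if any required secret is missing.
# Only exported environment variables are checked, which is how
# platform secrets reach the running process.
require_env() {
  _missing=""
  for _name in "$@"; do
    if [ -z "$(printenv "$_name")" ]; then
      _missing="$_missing $_name"
    fi
  done
  if [ -n "$_missing" ]; then
    echo "missing required secrets:$_missing" >&2
    return 1
  fi
}

# Example (variable names are placeholders):
#   fly secrets set DATABASE_URL=... SESSION_KEY=...   # once, from your machine
#   require_env DATABASE_URL SESSION_KEY || exit 1     # in the entrypoint
```

Reporting every missing name at once, rather than stopping at the first, saves a redeploy cycle when several secrets were forgotten.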

That said, we still scan all our container images after they’re built and before they’re pushed to the registry, to ensure nothing accidentally gets baked into the image. See my previous blog post about secret scanning and Next.js; we do the same with our Go containers.
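Dedicated secret scanners do a far better job than anything hand-rolled, but to show the idea, here is a deliberately naive shell sketch. It assumes the image’s filesystem has been exported to a directory (for example with `docker create`, `docker export` and `tar`) and greps it for two common patterns: AWS access key IDs in the `AKIA...` form and PEM private key headers. `find_secret_files` is a hypothetical name and the patterns are far from exhaustive.

```shell
#!/bin/sh
# Hypothetical, deliberately naive scanner: print every file under $1 that
# contains something resembling an AWS access key ID or a PEM private key.
# Binary files are skipped (-I). Prints nothing when there are no matches.
find_secret_files() {
  grep -rEIl \
    -e 'AKIA[0-9A-Z]{16}' \
    -e '-----BEGIN [A-Z ]*PRIVATE KEY-----' \
    "$1"
}

# Example CI usage (my-image is a placeholder):
#   cid=$(docker create my-image)
#   mkdir rootfs && docker export "$cid" | tar -xf - -C rootfs
#   docker rm "$cid"
#   hits=$(find_secret_files rootfs)
#   if [ -n "$hits" ]; then echo "possible secrets in image:" >&2; echo "$hits" >&2; exit 1; fi
```

The usage checks the printed output rather than the exit status, because grep exits with 2 if it hits an unreadable file, even when it also found matches.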

We also use Semgrep and Trunk in CI to highlight any security or linting issues that might have been missed by our development environment tooling. The configs are synced so the same checks run locally as a pre-commit hook, and CI enforces them.

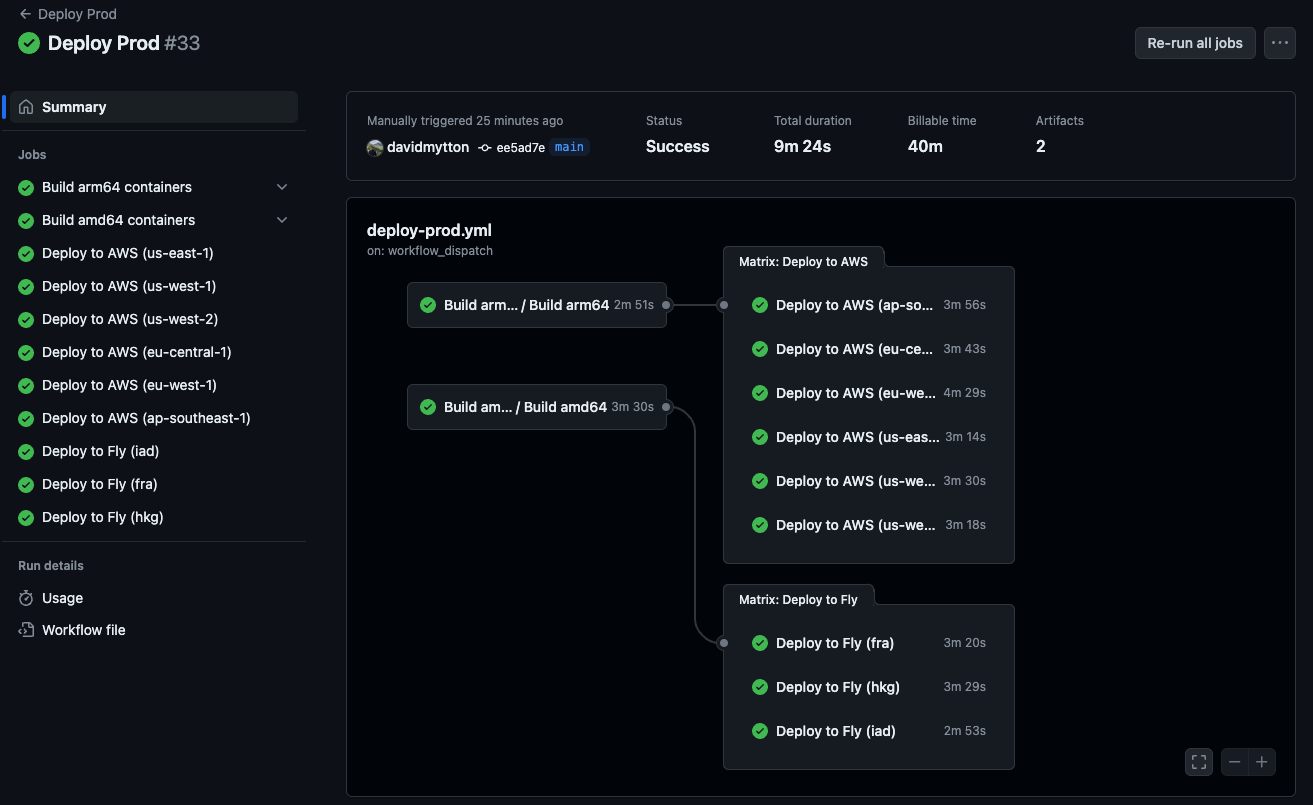

At Arcjet we use GitHub Actions to manage all our deployments. This provides us with several benefits:

These layers have a real impact. During the recent xz backdoor incident, the time we had spent upfront on container hardening paid off. Our minimal base image meant we weren't vulnerable, and that was something we could quickly confirm. Even if we had been, our read-only filesystems would have limited the attacker's options. We have other mitigations as well, such as not running with public internet access, but that’s a topic for another post!

Writing secure code is only half the battle. With these layers, you're making sure it reaches production safe and sound. Remember, no single defense is perfect, but a strong, multi-layered approach makes a massive difference. We didn't do this all at once either. It's a matter of continuous improvement!
