Running Wasm on the JVM

How to run Wasm in Java - how does Wasm interact with the JVM and what options are there for Java Wasm runtimes?

Lessons learned from running production Go services that call Wasm using Wazero: embedding Wasm binaries, optimizing startup times, pre-initialization, wasm-opt optimizations, and profiling.

The Arcjet SDK helps developers implement security features like bot detection, email validation, and sensitive data detection. It looks like any other JS SDK, but most of the core functionality is implemented in a WebAssembly component that we bundle with the SDK.

We write everything in Rust and then compile it to Wasm, which has been a great technology to work with. It provides a secure sandbox with near-native speed, which makes it perfect for security use cases where we need to analyze arbitrary HTTP requests.

Plus it’s cross-platform. Our JS SDK can be used on any platform (Node.js, Bun, Deno, Linux, Windows, macOS) and we’re working on SDKs for other languages, such as Python.

We also run Wasm on the server using Wazero for Go. I’ve written about how this works in the past and how it was an evolution from compiling Rust to FFI libraries called from Go. The same Wasm bundled with the SDK is also run server-side for several reasons: consistency across languages, eliminating code duplication, defense in depth, and as an SDK fallback. See the blog post for details.

In this post, I’ll be discussing the lessons learned from running production Go services that call Wasm using Wazero.

This blog post is also available as a CNCF talk on YouTube.

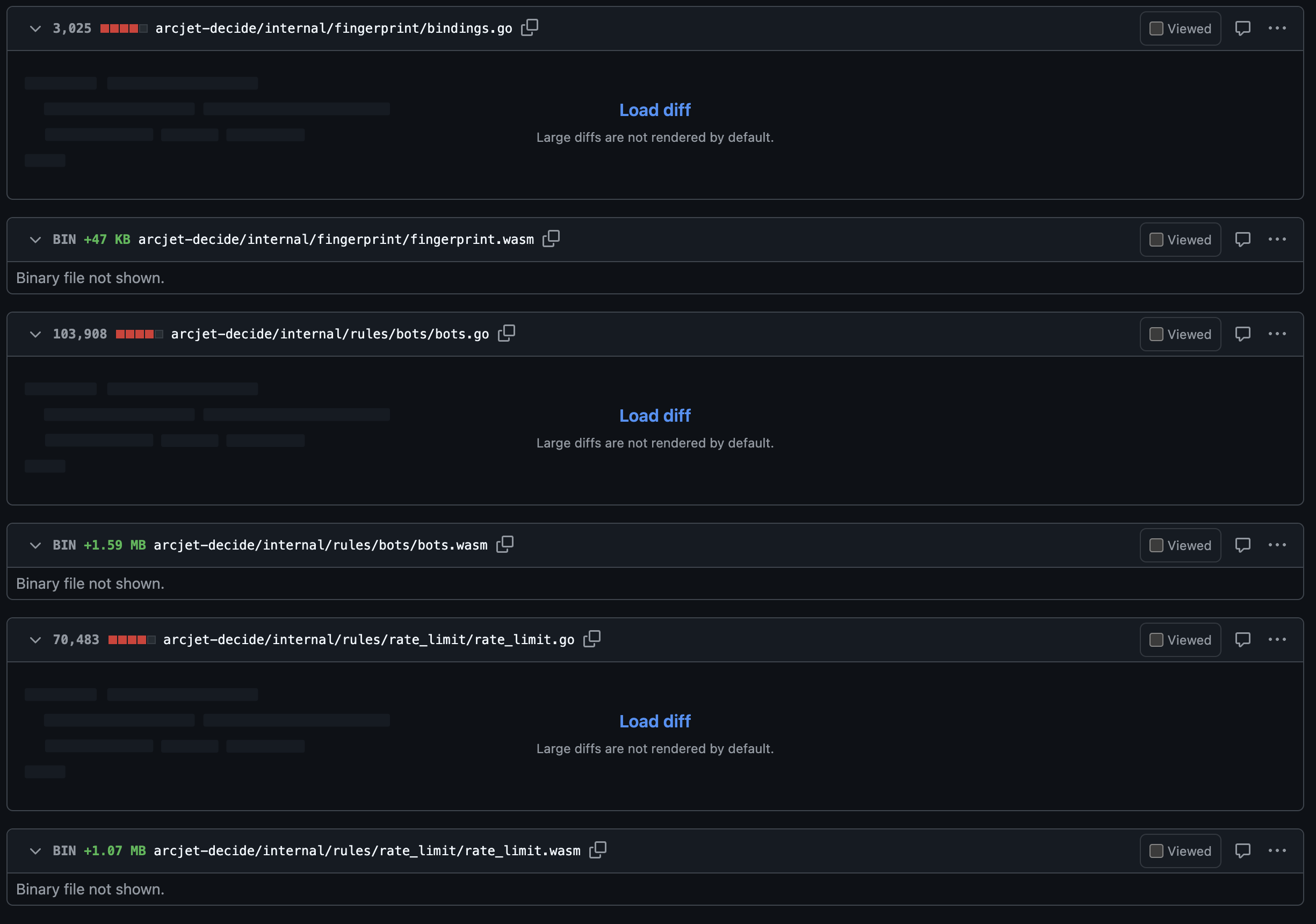

In our build process, we transform our Wasm definitions into native Go bindings, allowing us to call Wasm functions from Go. Initially, we embedded the Wasm binaries directly into our Go files as large hex-encoded byte arrays.

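```go
// The entire Wasm binary was inlined into the generated bindings.
var wasmFileBotRawBytes = []byte{
	0x00, 0x61, 0x73, 0x6d, // "\0asm" magic number
	// ...
}
```

We believed that keeping the binaries self-contained within the bindings would simplify repository management and follow the convention of not committing binaries to source control. However, this approach led to several issues, including bloated Go source files that slowed down our editors and made code reviews harder to manage.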
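To make the size problem concrete, here is a hypothetical generator that renders a Wasm binary as a Go []byte literal like the one above. It is a minimal sketch, not Arcjet's actual codegen; goByteLiteral and the 12-bytes-per-line layout are assumptions. With this formatting every byte costs about six characters of source ("0x00, "), so a 1 MB binary becomes roughly 6 MB of Go.

```go
package main

import (
	"fmt"
	"strings"
)

// goByteLiteral renders wasm as a gofmt-style Go []byte literal, 12 bytes
// per line. Hypothetical helper for illustration only.
func goByteLiteral(varName string, wasm []byte) string {
	var b strings.Builder
	fmt.Fprintf(&b, "var %s = []byte{\n", varName)
	for i, v := range wasm {
		if i%12 == 0 {
			b.WriteString("\t") // indent each new row
		}
		fmt.Fprintf(&b, "0x%02x,", v)
		if i%12 == 11 || i == len(wasm)-1 {
			b.WriteString("\n") // end of row or end of input
		} else {
			b.WriteString(" ")
		}
	}
	b.WriteString("}\n")
	return b.String()
}

func main() {
	// The first eight bytes of a core Wasm module: "\0asm" plus version 1.
	header := []byte{0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00}
	fmt.Print(goByteLiteral("wasmFileBotRawBytes", header))
}
```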

To address these problems, we switched to using go:embed, which allowed us to embed the Wasm binary files efficiently:

```go
// The blank import is required to use //go:embed with []byte or string variables.
import _ "embed"

//go:embed bot.wasm
var wasmFileBot []byte

// ...
```

This change improved editor performance and made code reviews more manageable, as the binaries were no longer bloating the Go source files. Committing the Wasm binaries also proved to be a better practice in our case, despite conventional wisdom, because it enhanced the overall development experience.
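Because go:embed hands you the raw file contents, a cheap header check at startup can catch a missing or wrong file before the (slow) compile step. A minimal sketch using only the standard library; checkWasmHeader is a hypothetical helper, and it only accepts core modules (magic "\0asm" followed by version 1), not Component Model binaries.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
)

// wasmMagic is "\0asm", the first four bytes of every Wasm binary.
var wasmMagic = []byte{0x00, 0x61, 0x73, 0x6d}

// wasmCoreVersion is version 1 as a little-endian u32, used by core modules.
var wasmCoreVersion = []byte{0x01, 0x00, 0x00, 0x00}

// checkWasmHeader is a hypothetical startup guard for embedded Wasm bytes.
func checkWasmHeader(b []byte) error {
	if len(b) < 8 {
		return errors.New("wasm: binary too short")
	}
	if !bytes.Equal(b[:4], wasmMagic) {
		return errors.New("wasm: missing \\0asm magic number")
	}
	if !bytes.Equal(b[4:8], wasmCoreVersion) {
		return fmt.Errorf("wasm: unsupported version bytes % x", b[4:8])
	}
	return nil
}

func main() {
	// Stand-in for the //go:embed variable (wasmFileBot in the snippet above).
	wasmFileBot := []byte{0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00}
	if err := checkWasmHeader(wasmFileBot); err != nil {
		panic(err)
	}
	fmt.Println("embedded Wasm header looks valid")
}
```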

On startup, we decode the WebAssembly binary from the embedded bytes and compile it to a CompiledModule. From the Wazero docs:

Compiler compiles WebAssembly modules into machine code ahead of time (AOT), during Runtime.CompileModule. This means your WebAssembly functions execute natively at runtime. Compiler is faster than Interpreter, often by order of magnitude (10x) or more.

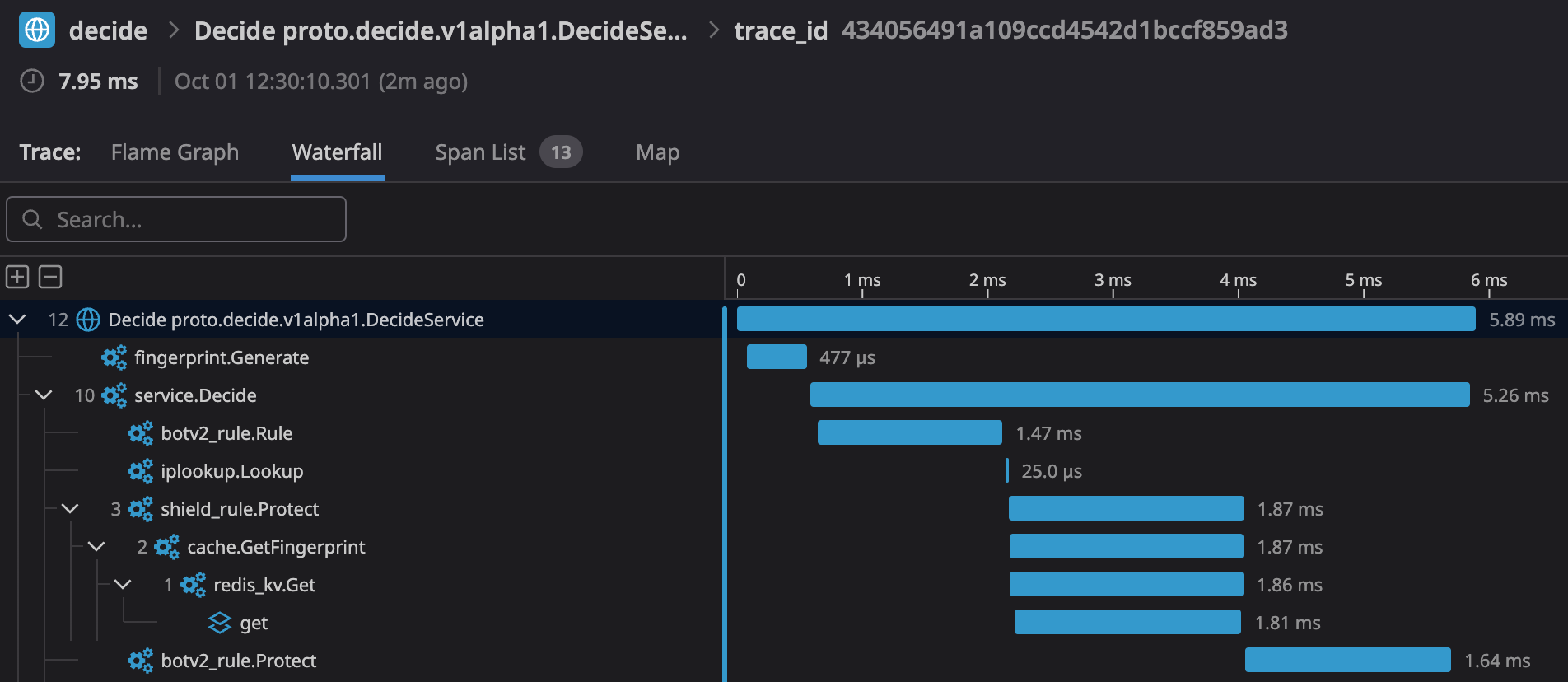

API performance is a key metric for us - our p50 is 10ms and we aim for a p99 of 30ms. This makes pre-compilation an important optimization because we don’t want to be recompiling the Wasm for every request.

When our Go server starts up, we execute the compile step for each of our Wasm components. This takes several seconds, which is quite slow, but is the tradeoff for runtime performance.

We also do this step as part of our tests, which are otherwise independent and isolated. The test setup function pre-compiles the Wasm module at the start of the test runner so it only happens once.
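Compiling once and sharing the result takes very little code in Go. A minimal sketch using the standard library's sync.OnceValues (Go 1.21+): precompile is a hypothetical helper, and the compile function stands in for the real Wazero call (for example Runtime.CompileModule returning a CompiledModule) so the snippet has no external dependencies. The same getter can back both the server and a package-level test setup, so the expensive step runs once per process.

```go
package main

import (
	"fmt"
	"sync"
)

// precompile returns a getter that runs compile on the Wasm bytes exactly
// once, however many requests (or tests) call it, and caches the result,
// including any error. compile is a hypothetical stand-in for the real
// Wazero compile step.
func precompile[T any](wasm []byte, compile func([]byte) (T, error)) func() (T, error) {
	return sync.OnceValues(func() (T, error) {
		return compile(wasm)
	})
}

func main() {
	calls := 0
	getModule := precompile([]byte{0x00, 0x61, 0x73, 0x6d}, func(b []byte) (string, error) {
		calls++ // counts how often the expensive step actually runs
		return fmt.Sprintf("compiled %d bytes", len(b)), nil
	})

	// Simulate several requests: only the first one pays the compile cost.
	for i := 0; i < 3; i++ {
		m, err := getModule()
		if err != nil {
			panic(err)
		}
		fmt.Println(m)
	}
	fmt.Println("compile calls:", calls)
}
```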

The Wasm module is loaded every time the Arcjet SDK client is created, such as on cold start in a serverless environment, so slow startup times are not acceptable.

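To address this issue, we integrated Wizer into our build process. Wizer allows us to pre-initialize and precompile our Wasm modules ahead of time: it instantiates the module at build time, runs its initialization function, and snapshots the resulting state into a new Wasm binary.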

By doing this, we eliminate the need to compile the Wasm modules at runtime.

We run wasm-opt over our generated Wasm binaries as part of the build process to apply various optimizations. You can choose to optimize for binary size or for performance, and each comes at some cost to the other.

For our SDKs, we want to balance both. We don’t want to ship huge SDKs and the startup time is important to minimize in serverless environments with cold starts. For long-running servers like a classic Node.js Express server we can lean more towards performance vs binary size, but we still don’t want our users to experience slow startups. On our own servers, we can optimize heavily for performance.

There are lots of options to choose from, but for now we’ve settled on:

- `--converge` runs all the optimizations repeatedly until the program no longer changes.
- `--flatten --rereloop` flattens the internal representation and then rewrites the control flow graph.
- `-Oz` applies aggressive size optimizations rather than speed optimizations. This should be tested to determine which tradeoffs make sense for different runtime assumptions.
- `--gufa` runs Grand Unified Flow Analysis, a whole-program pass that infers which values can reach each location and optimizes based on that.
- `-Oz` is specified again to clean up the internal representation.

The main disadvantage of this approach is longer build times. However, this is a one-time cost per build and doesn’t impact runtime performance. Pre-compilation ensures that all instances start with optimized modules, providing consistent performance across deployments.
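The pass list can be wired into a Go build script. A minimal sketch, assuming wasm-opt (from Binaryen) is on the PATH in a real build; wasmOptArgs and the file names are hypothetical. It only constructs and prints the command, so it runs without Binaryen installed.

```go
package main

import (
	"fmt"
	"os/exec"
)

// wasmOptArgs returns the wasm-opt arguments in the order described in the
// post. The input and output paths are hypothetical placeholders.
func wasmOptArgs(in, out string) []string {
	return []string{
		"--converge",              // repeat the passes until the module stops changing
		"--flatten", "--rereloop", // flatten the IR, then rebuild control flow
		"-Oz",                     // aggressive size optimization
		"--gufa",                  // Grand Unified Flow Analysis (whole program)
		"-Oz",                     // clean up the IR left by the earlier passes
		in, "-o", out,
	}
}

func main() {
	cmd := exec.Command("wasm-opt", wasmOptArgs("bot.wasm", "bot.opt.wasm")...)
	// Printing keeps the sketch dependency-free; a real build script would
	// call cmd.Run() and check the error.
	fmt.Println(cmd.String())
}
```

Keeping the arguments in one function makes it easy to maintain separate size-leaning and speed-leaning pass lists for the SDK and server builds.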

The final result is a build process involving the following steps:

- Compile the Rust code to Wasm using the wasm32-unknown-unknown target.
- Pre-initialize the module with Wizer.
- Optimize the binary with wasm-opt.

None of the normal performance tracing tools we use can inspect the Wasm code from Go. We use OpenTelemetry instrumentation and all we get to see is the top-level Go function call.

A workaround is to scatter new spans throughout the Go bindings, but this still doesn’t allow detailed tracing of what’s happening inside Wasm: all you can see is the entry and exit timings.

wzprof looks like a promising profiling solution built on top of Wazero. So far our performance is well within our latency goals, so we’ve not needed to explore it yet.

[Figure: trace spans for fingerprint.Generate and botv2_rule.Protect]

By rethinking how we embed Wasm modules, we improved our development workflow and code maintainability. Optimizing startup times through pre-initialization with Wizer and applying performance enhancements with wasm-opt allowed us to meet our stringent latency goals.

There are lots of exciting developments within the Wasm community and these tools have helped us build a production-quality service. Using Wasm was where we chose to spend most of our innovation tokens, so it’s been great to see that bet pay off.
