How Arcjet uses AWS Global Accelerator to route API requests via low-latency private networking to meet our end-to-end p50 latency SLA of 20–30ms.

Arcjet performs real-time security analysis in the critical path of API and authentication flows. That means latency isn’t just a consideration; it’s a core design constraint.

To meet our end-to-end p50 latency SLA of 20–30ms, we deploy globally, use persistent HTTP/2 connections, and rely on AWS's network to route each request to the nearest healthy region.

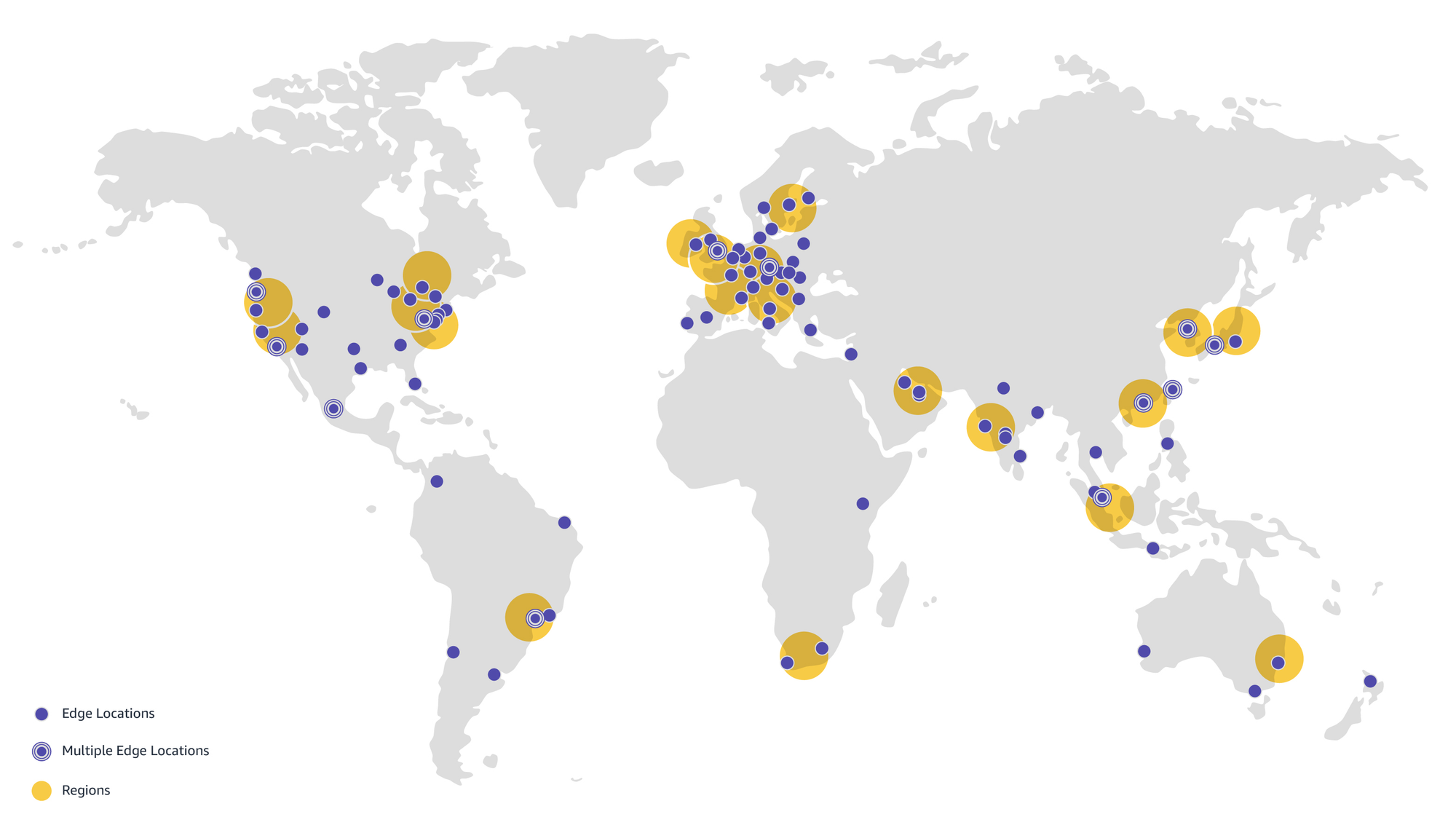

A core component of our architecture is AWS Global Accelerator. This service accepts traffic on a set of static anycast IP addresses at distributed AWS edge locations (points of presence) and routes it to the closest healthy AWS region. Once traffic enters an edge location, it travels over AWS's private global network, which provides more stable, lower-latency paths than the public internet.

This post explains the details of how we use AWS Global Accelerator and other AWS services to achieve our SLA targets. While our context is delivering a low-overhead security product for developers, the principles and benefits discussed are applicable to any mission-critical, latency-sensitive global application.

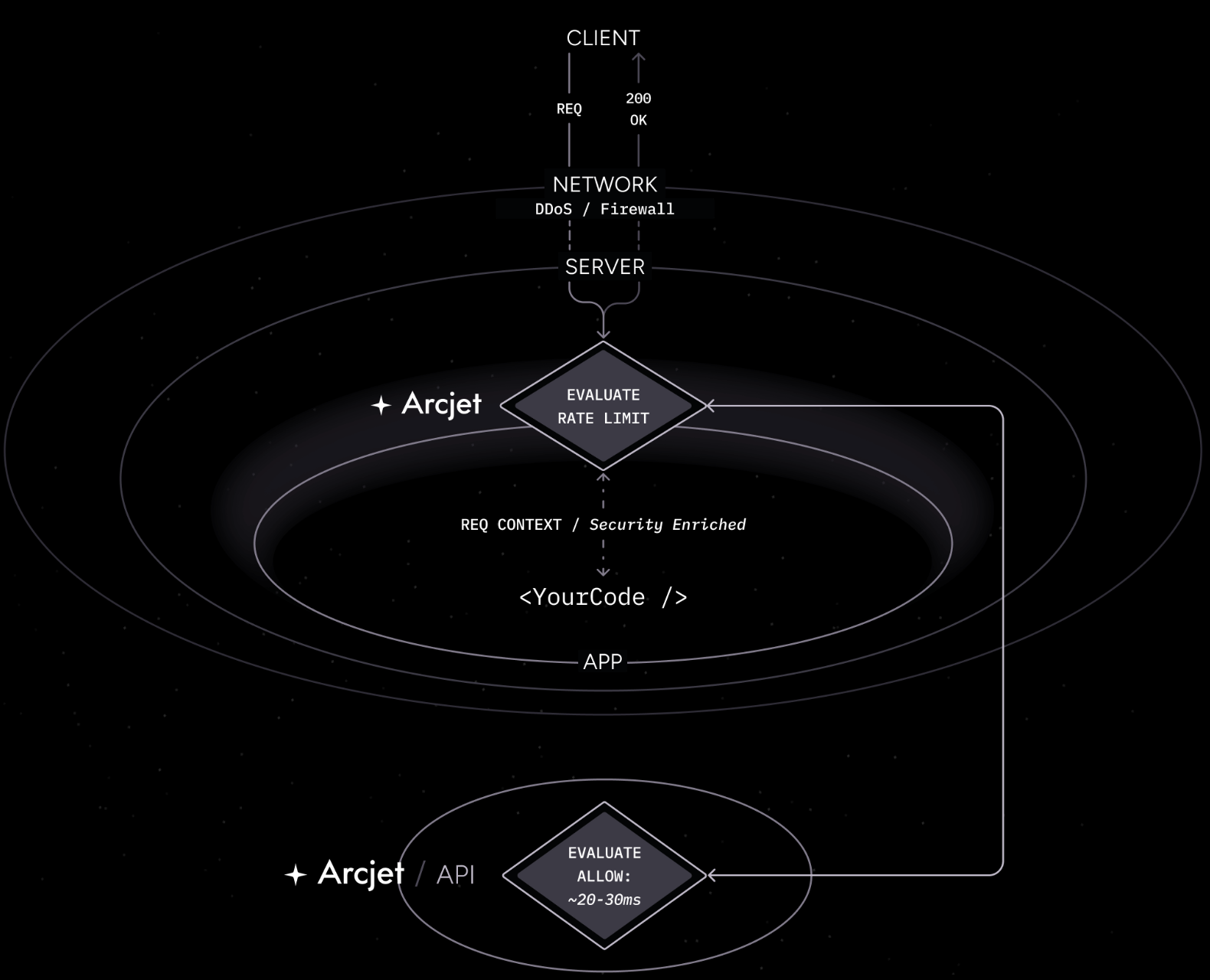

Arcjet is delivered as an SDK so it can be tightly integrated into the logic of the application and benefit from the full request context. Security rules can be adjusted dynamically based on user and session characteristics, and the results can be incorporated into how the application behaves.

Everything is downstream of latency. That’s why Arcjet takes a local-first approach: rules are evaluated in-process via a WebAssembly module bundled in the SDK. Many decisions complete within 1–2ms, but others require a network call, for example when our dynamic IP reputation database is one of the security signals.
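The local-first flow can be sketched as a two-stage decision: evaluate local rules first, and only make a network call when a remote signal is actually needed. This is a simplified Python model, not Arcjet's SDK (which runs its rules in a WebAssembly module). `Request`, `Decision`, `decide`, the rule functions and the `/login` policy are all hypothetical, and `fake_remote_lookup` merely stands in for the cloud decision API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Request:
    ip: str
    path: str
    user_agent: str


@dataclass
class Decision:
    allowed: bool
    reason: str
    used_remote: bool = False


# A local rule returns a Decision when it can decide on its own, or None to defer.
LocalRule = Callable[[Request], Optional[Decision]]


def block_missing_user_agent(req: Request) -> Optional[Decision]:
    """Hypothetical rule that needs no network access."""
    if not req.user_agent:
        return Decision(allowed=False, reason="missing user agent")
    return None


def needs_ip_reputation(req: Request) -> bool:
    """Hypothetical policy: only sensitive routes consult the remote IP reputation signal."""
    return req.path.startswith("/login")


def fake_remote_lookup(req: Request) -> Decision:
    """Stand-in for the cloud decision API; flags one documentation-range IP as bad."""
    bad = req.ip == "203.0.113.7"
    return Decision(allowed=not bad, reason="ip reputation", used_remote=True)


def decide(
    req: Request,
    local_rules: List[LocalRule],
    needs_remote: Callable[[Request], bool],
    remote_lookup: Callable[[Request], Decision],
) -> Decision:
    # 1. Evaluate local rules in-process; the first definitive result wins.
    for rule in local_rules:
        result = rule(req)
        if result is not None:
            return result
    # 2. Only pay for a network round trip when a remote signal is required.
    if needs_remote(req):
        return remote_lookup(req)
    return Decision(allowed=True, reason="allowed locally")
```

With this shape, a request with no user agent is rejected without any network traffic, while a request to `/login` triggers the (simulated) remote lookup.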

In cases where our cloud decision API is involved, we set ourselves a p50 response time goal of 20ms, which leaves 5–10ms for the network round trip so that we can hit our end-to-end latency goal of 20–30ms.
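As a worked example of this budget, subtracting the 20ms API goal from an end-to-end target gives the time left for the network. A 5–10ms round trip corresponds to end-to-end totals of 25–30ms, the upper part of the 20–30ms range. The function name is illustrative.

```python
def network_budget_ms(end_to_end_target_ms: float, api_p50_ms: float) -> float:
    """Time left for the network round trip after server-side processing."""
    remaining = end_to_end_target_ms - api_p50_ms
    if remaining < 0:
        raise ValueError("API processing alone exceeds the end-to-end target")
    return remaining


API_P50_GOAL_MS = 20  # cloud decision API goal from the text

for target_ms in (25, 30):
    budget = network_budget_ms(target_ms, API_P50_GOAL_MS)
    print(f"end-to-end {target_ms}ms -> network budget {budget}ms")
```

This prints a 5ms budget for a 25ms target and 10ms for a 30ms target.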

This goal applies globally. Developers deploy their applications everywhere, so centralizing our API is not an option.

Arcjet’s cloud decision API is written in Go and provides both JSON and gRPC interfaces to support different environments, with gRPC preferred due to the more optimized Protocol Buffers message format.

Our cloud decision API is containerized and deployed across multiple availability zones within each AWS region on Amazon Elastic Kubernetes Service (EKS), fronted by an Application Load Balancer (ALB). The ALB distributes incoming API traffic across our containers, and its cross-zone load balancing means that if one availability zone experiences an issue, traffic is automatically routed to healthy instances in the other zones within that region, improving resilience.
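A toy model of this behaviour: targets from every zone form one pool, and a zone that fails health checks simply drops out of it. It assumes round-robin selection (the ALB default routing algorithm) and, for simplicity, treats health as a per-zone flag, whereas real ALB health checks are per target. Zone and pod names are made up.

```python
from itertools import cycle
from typing import Dict, List, Set


def healthy_pool(targets_by_zone: Dict[str, List[str]], unhealthy_zones: Set[str]) -> List[str]:
    """Cross-zone view: one pool of targets from every zone that is still healthy."""
    return [
        target
        for zone in sorted(targets_by_zone)
        if zone not in unhealthy_zones
        for target in targets_by_zone[zone]
    ]


def route(targets_by_zone: Dict[str, List[str]], unhealthy_zones: Set[str], n_requests: int) -> List[str]:
    """Round-robin n requests over the healthy pool."""
    pool = healthy_pool(targets_by_zone, unhealthy_zones)
    if not pool:
        raise RuntimeError("no healthy targets in this region")
    rr = cycle(pool)
    return [next(rr) for _ in range(n_requests)]


zones = {
    "us-east-1a": ["pod-a1", "pod-a2"],
    "us-east-1b": ["pod-b1"],
    "us-east-1c": ["pod-c1"],
}
print(route(zones, unhealthy_zones={"us-east-1a"}, n_requests=4))
# ['pod-b1', 'pod-c1', 'pod-b1', 'pod-c1']
```

When `us-east-1a` is marked unhealthy, all requests land on the remaining zones without any change on the client side.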

From the developer’s perspective, Arcjet is a single endpoint. Behind the scenes, Global Accelerator ensures each request is routed to the closest healthy AWS region using latency-based routing and active health checks. This happens automatically, with no configuration or region awareness required from the developer.
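Conceptually, the routing decision picks the nearest region that is passing health checks. The sketch below is a simplification with made-up latencies: Global Accelerator performs this routing inside AWS's network and also honours configuration such as traffic dials and endpoint weights, which are omitted here. `pick_region` is a hypothetical helper.

```python
from typing import Dict, Set


def pick_region(latency_ms_by_region: Dict[str, float], healthy: Set[str]) -> str:
    """Return the healthy region with the lowest measured latency from the client."""
    candidates = {r: ms for r, ms in latency_ms_by_region.items() if r in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Hypothetical latencies from a client in Europe.
latency = {"eu-west-1": 8.0, "eu-central-1": 14.0, "us-east-1": 75.0}
print(pick_region(latency, healthy=set(latency)))                   # eu-west-1
print(pick_region(latency, healthy={"eu-central-1", "us-east-1"}))  # eu-central-1
```

If the nearest region fails its health checks, the next-closest healthy region takes over, which is the behaviour the developer never has to configure.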

Setting up a new connection is often the slowest part of communicating with our API. Establishing a TCP connection and performing a TLS handshake takes multiple round trips, adding several milliseconds before the first request on a new connection can even be sent. This can quickly eat into our 5–10ms network round trip budget and is particularly problematic in serverless environments, where cold starts are common.
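To put numbers on this, a simple model counts round trips: one for the TCP handshake, one (TLS 1.3) or two (TLS 1.2) for the TLS handshake, and one for the request itself. With a hypothetical 5ms round trip time, a request on a fresh connection takes roughly three to four times as long as one on an existing connection. The model deliberately ignores optimizations such as TCP Fast Open and TLS session resumption or 0-RTT; the function and constant names are illustrative.

```python
# Round trips for a full TLS handshake (no session resumption, no 0-RTT).
TLS_HANDSHAKE_ROUND_TRIPS = {"1.2": 2, "1.3": 1}


def first_response_ms(rtt_ms: float, new_connection: bool, tls_version: str = "1.3",
                      server_ms: float = 0.0) -> float:
    """Estimate the time until the first response arrives for one request."""
    round_trips = 1  # the request/response exchange itself
    if new_connection:
        round_trips += 1  # TCP three-way handshake
        round_trips += TLS_HANDSHAKE_ROUND_TRIPS[tls_version]
    return round_trips * rtt_ms + server_ms


RTT_MS = 5.0  # hypothetical client-to-endpoint round trip
for label, new, tls in [("reused", False, "1.3"), ("new, TLS 1.3", True, "1.3"), ("new, TLS 1.2", True, "1.2")]:
    print(f"{label}: {first_response_ms(RTT_MS, new, tls)}ms")
```

Under these assumptions the output is 5.0ms for a reused connection, 15.0ms for a new TLS 1.3 connection and 20.0ms for a new TLS 1.2 connection, before any server processing time.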

To mitigate this, our SDK establishes a persistent HTTP/2 connection to our API, allowing multiple requests to be multiplexed over a single, long-lived connection. Global Accelerator also shortens connection setup: the TCP handshake is completed at the closest edge location, which is usually much closer than the AWS region serving the request, and the remaining round trips, including the TLS handshake with the load balancer, travel over AWS's network.
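The effect of a persistent connection can be observed locally by counting how many TCP connections a server accepts. Python's standard library has no HTTP/2 client, so this sketch swaps in HTTP/1.1 keep-alive via `http.client.HTTPConnection`: it reuses one connection for sequential requests but, unlike HTTP/2, does not multiplex concurrent ones. It also uses plain HTTP on localhost, so no TLS is involved. The `/decide` path, `CountingHandler` and `count_connections` are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CountingHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # keep the connection open between requests
    connections = 0  # one handler instance is created per accepted TCP connection

    def setup(self):
        super().setup()
        type(self).connections += 1

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def count_connections(n_requests: int, reuse: bool) -> int:
    """Send n sequential requests and return how many TCP connections the server saw."""
    CountingHandler.connections = 0
    server = ThreadingHTTPServer(("127.0.0.1", 0), CountingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    try:
        conn = http.client.HTTPConnection("127.0.0.1", port)
        for i in range(n_requests):
            if i > 0 and not reuse:
                conn.close()  # discard the connection and pay the setup cost again
                conn = http.client.HTTPConnection("127.0.0.1", port)
            conn.request("GET", "/decide")
            conn.getresponse().read()  # drain the body so the connection can be reused
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return CountingHandler.connections


print(count_connections(5, reuse=True))   # 1
print(count_connections(5, reuse=False))  # 5
```

With reuse, five requests share one connection, so the handshake cost is paid once instead of five times.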

The network path between the edge location and the AWS region runs over the AWS global network rather than the public internet. Using AWS’s private backbone helps reduce network jitter and unpredictable routing, and this stability is critical for maintaining consistent performance, especially in bursty serverless environments.

We run in more than 10 AWS regions and launch more based on customer demand. Each regional deployment is completely independent, a key design choice for maximizing availability and fault isolation, with Global Accelerator routing traffic between them. If traffic is rerouted, this happens within AWS’s network, so our SDK client does not need to reconnect and avoids a cold start.

We measure both internal processing latency and end-to-end cold start latency from the SDK’s perspective. Over the last 7 days, the median internal API response time across all regions was 12ms (p95: 20ms).
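For reference, p50 and p95 are order statistics over the collected latency samples. The sketch below uses the nearest-rank definition; monitoring tools differ (many interpolate between samples), and the sample values are invented rather than Arcjet's data.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical API response times in milliseconds.
samples_ms = [12, 9, 11, 14, 20, 10, 13, 12, 18, 25]
print(percentile(samples_ms, 50), percentile(samples_ms, 95))  # 12 25
```

Reporting both values matters because the median describes the typical request, while p95 captures the slow tail that a small fraction of users will still experience.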

In a full cold start scenario, where the Arcjet SDK initiates a new connection, we recorded a p50 of 25ms from request initiation to response delivery, including 2ms for the TCP handshake and 6ms for the TLS handshake.
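Read as a budget, the handshakes account for 8ms of the 25ms cold-start figure, about a third, leaving roughly 17ms for the request round trip and server processing. Percentiles of individual phases do not strictly add up, so treat this as an approximation of a typical request; `setup_breakdown` is a hypothetical helper.

```python
from typing import Dict


def setup_breakdown(total_ms: float, setup_phases_ms: Dict[str, float]) -> Dict[str, float]:
    """Split a cold-start latency into connection setup and everything else."""
    setup_ms = sum(setup_phases_ms.values())
    if setup_ms > total_ms:
        raise ValueError("setup phases exceed the total")
    return {
        "setup_ms": setup_ms,
        "rest_ms": total_ms - setup_ms,
        "setup_share": setup_ms / total_ms,
    }


# Figures from the text: 25ms p50 cold start, 2ms TCP, 6ms TLS.
print(setup_breakdown(25, {"tcp": 2, "tls": 6}))
```

The setup share is the portion a persistent connection avoids on every subsequent request.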

Low latency isn’t just a feature; it’s fundamental to Arcjet’s design for real-time application security. By leveraging AWS’s global edge network and services like Global Accelerator, we offload the hardest parts of distributed networking and stay focused on building developer-first security features.

If you're building latency-sensitive APIs, especially in a multi-region or serverless world, Global Accelerator can probably help.
