Introducing the Arcjet Python SDK beta

The Arcjet Python SDK allows you to implement rate limiting, bot detection, email validation, and signup spam prevention in FastAPI and Flask style applications.

New bot protection functionality with detection of AI scrapers, bot categories, and an open source list of bot identifiers.

Arcjet’s framework-native SDK helps developers implement security features directly in code. Bot protection is our most popular feature so far, which makes sense given the concern about AI services scraping content from websites.

Your site has probably already been used as part of the training datasets for many existing large language models, but that doesn’t mean you need to continue to allow them!

And if you’re not that bothered about AI, maybe you just want to clean up your website analytics reports, stop form submission spam, and prevent other types of content scraping. That's what bot detection is for.

Today we’re announcing new bot detection functionality that makes it easier to protect your site from specific categories of bot, including AI scrapers. We're also announcing our open source bot list that powers the core of the bot detection built into our SDK.

Each identified bot is grouped into a top-level category, which lets you allow or deny multiple bots in one go. Whether that's internet archivers, social link previews, or monitoring agents, you can easily allow or deny whole groups from our bot list.

For example, if you want to allow all search engines, social link preview bots, and curl requests, you can add the CATEGORY:PREVIEW and CATEGORY:SEARCH_ENGINE categories to your rules, plus the specific identifier for curl:

```typescript
// or the adapter for your framework, e.g. @arcjet/node
import arcjet, { detectBot } from "@arcjet/next";

const aj = arcjet({
  key: process.env.ARCJET_KEY!,
  rules: [
    detectBot({
      mode: "LIVE",
      // configured with a list of bots to allow from
      // https://arcjet.com/bot-list - all other detected bots will be blocked
      allow: [
        "CATEGORY:PREVIEW",
        "CATEGORY:SEARCH_ENGINE",
        "CURL", // allows the default user-agent of the `curl` tool
      ],
    }),
  ],
});
```

An example of defining bot detection rules in TypeScript. Bots identified in the link preview and search engine categories, plus requests from the curl command line tool, will be allowed. All other bots will be denied.
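The allow list above mixes categories and individual identifiers. One rough way to picture how such a list is resolved is to expand it into a flat set of identifiers and check the detected bot against that set. The sketch below does this. It is not Arcjet's implementation. The `BOT_CATEGORIES` map, its member identifiers, and the `expandRule`/`isAllowedBot` helpers are hypothetical, and the real category memberships come from the published bot list.

```typescript
// Hypothetical, heavily trimmed category map: the identifiers and memberships
// are illustrative only; the real ones live in the published bot list.
const BOT_CATEGORIES = new Map<string, readonly string[]>([
  ["CATEGORY:PREVIEW", ["SLACK_LINK_PREVIEW", "DISCORD_CRAWLER"]],
  ["CATEGORY:SEARCH_ENGINE", ["GOOGLE_CRAWLER", "BING_CRAWLER"]],
]);

// Expand a rule's entries (categories or individual identifiers) into a
// flat set of bot identifiers.
function expandRule(entries: readonly string[]): Set<string> {
  const ids = new Set<string>();
  for (const entry of entries) {
    const members = BOT_CATEGORIES.get(entry);
    if (members !== undefined) {
      for (const id of members) ids.add(id);
    } else {
      ids.add(entry); // anything that is not a category is a specific identifier, e.g. "CURL"
    }
  }
  return ids;
}

// Allow-list semantics: a detected bot passes only if its identifier is in
// the expanded set; every other detected bot is denied.
function isAllowedBot(detectedId: string, allow: readonly string[]): boolean {
  return expandRule(allow).has(detectedId);
}

const allow = ["CATEGORY:PREVIEW", "CATEGORY:SEARCH_ENGINE", "CURL"];
```

With this list, a detected `GOOGLE_CRAWLER` or `CURL` is allowed. A detected AI crawler appears in neither category, so it is denied.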

Using CATEGORY:AI you can create a rule to detect AI scrapers and customize the list to allow specific ones.

For example, if you wanted to block all AI bots, but make an exception for Perplexity (because it’s more like a search engine), you could set up a rule like this:

```typescript
import arcjet, { botCategories, detectBot } from "@arcjet/next";

const aj = arcjet({
  key: process.env.ARCJET_KEY!,
  rules: [
    detectBot({
      mode: "LIVE",
      // configured with a list of bots to deny from
      // https://arcjet.com/bot-list - all other bots will be allowed
      deny: [
        // filter a category to remove individual bots from our provided lists
        ...botCategories["CATEGORY:AI"].filter(
          (bot) => bot !== "PERPLEXITY_CRAWLER",
        ),
      ],
    }),
  ],
});
```

An example of defining AI bot detection rules for Next.js, with an exception to allow the Perplexity crawler. The Arcjet SDK supports many different frameworks and runtimes.
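If you need to carve more than one bot out of a category, you can wrap the filter in a small helper. This is a sketch. `allExcept` is a hypothetical helper, and `AI_BOTS` is an illustrative stand-in for `botCategories["CATEGORY:AI"]`, which contains many more identifiers. The helper is generic, so the returned array keeps the element type of the list you pass in.

```typescript
// Illustrative stand-in for botCategories["CATEGORY:AI"]; the real array
// comes from the Arcjet SDK and contains many more identifiers.
const AI_BOTS = ["OPENAI_CRAWLER", "ANTHROPIC_CRAWLER", "PERPLEXITY_CRAWLER"] as const;

// Return every identifier in `category` except the listed exceptions.
function allExcept<T extends string>(category: readonly T[], ...exceptions: T[]): T[] {
  const skip = new Set(exceptions);
  return category.filter((bot) => !skip.has(bot));
}

// Deny all (illustrative) AI bots except Perplexity, mirroring the rule above.
const deny = allExcept(AI_BOTS, "PERPLEXITY_CRAWLER");
```

In the rule above, you could then write `deny: allExcept(botCategories["CATEGORY:AI"], "PERPLEXITY_CRAWLER")`. This assumes each `botCategories` entry is an array of strings, as the `.filter` call suggests. Further exceptions are just extra arguments.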

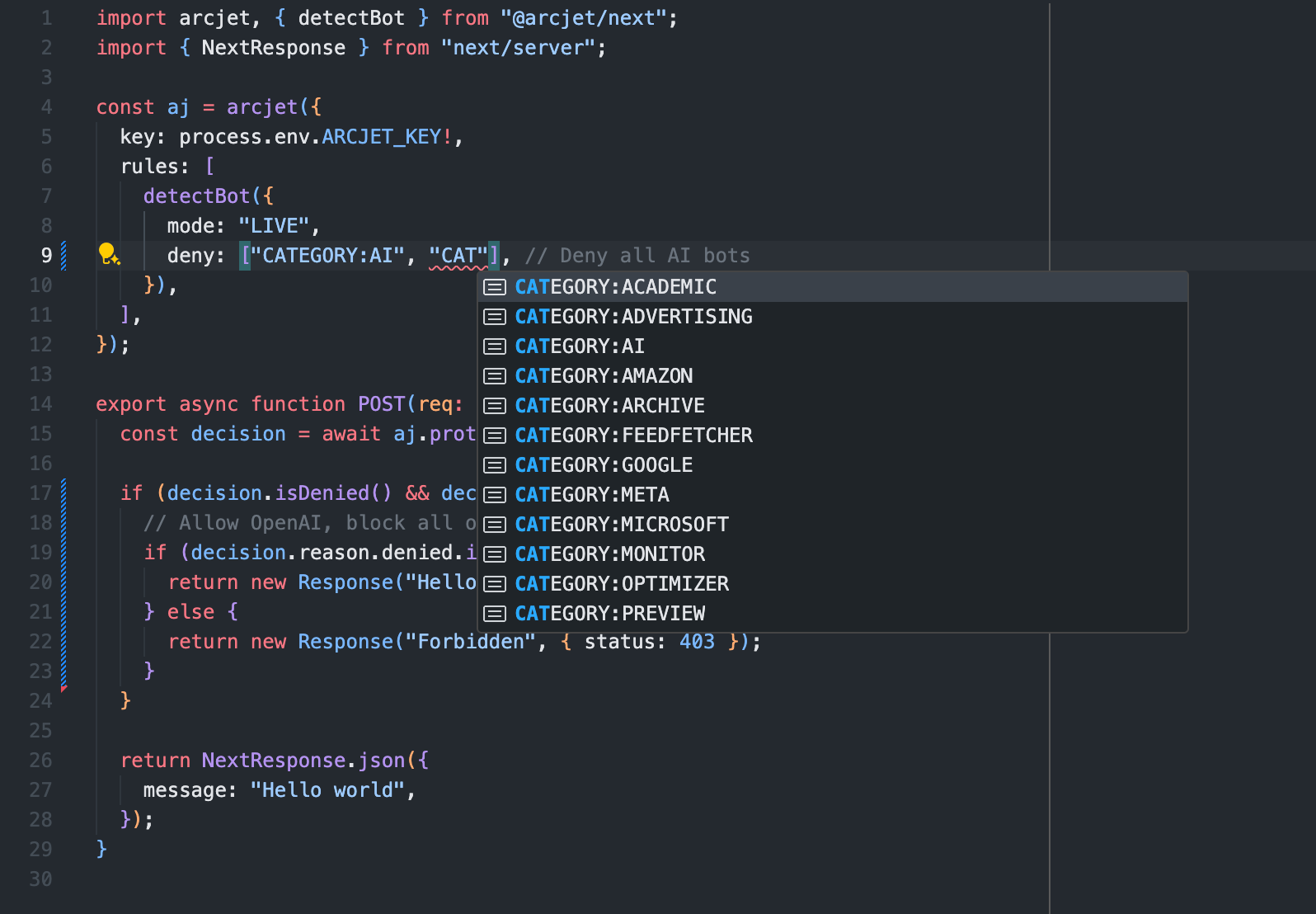

When Arcjet returns a decision, you can inspect both the category match and the specific bot identifier that was detected. This means you can respond differently to different bots.

For example, let’s say you have a commercial agreement with OpenAI to use your content, so you want to allow their crawler but deny all other AI bots. You could customize the response logic as follows:

```typescript
import arcjet, { detectBot } from "@arcjet/next";
import { NextResponse } from "next/server";

const aj = arcjet({
  key: process.env.ARCJET_KEY!,
  rules: [
    detectBot({
      mode: "LIVE",
      deny: ["CATEGORY:AI"], // Deny all AI bots
    }),
  ],
});

export async function GET(req: Request) {
  const decision = await aj.protect(req);

  if (decision.isDenied() && decision.reason.isBot()) {
    // Allow OpenAI, block all others
    if (decision.reason.denied.includes("OPENAI_CRAWLER")) {
      return new Response("Hello OpenAI");
    } else {
      return new Response("Forbidden", { status: 403 });
    }
  }

  return NextResponse.json({
    message: "Hello world",
  });
}
```

An example of defining AI bot detection rules for Next.js, with a custom response for OpenAI. This could be customized with a different API response or a different action; it's just code, so you can define your own logic. The Arcjet SDK supports many different frameworks and runtimes.
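One way to keep the branching in that handler testable is to move the choice of response into a pure function. The sketch below assumes you pass it the `decision.reason.denied` array used above. `PARTNER_REPLIES`, `BotReply`, and `replyForDeniedBots` are hypothetical names, not part of the Arcjet SDK.

```typescript
// Hypothetical partner table: denied bots listed here get a custom reply
// instead of a 403, mirroring the OpenAI branch in the handler above.
const PARTNER_REPLIES = new Map<string, string>([["OPENAI_CRAWLER", "Hello OpenAI"]]);

interface BotReply {
  status: number;
  body: string;
}

// Pick a reply from the denied bot identifiers
// (decision.reason.denied in the handler above).
function replyForDeniedBots(denied: readonly string[]): BotReply {
  for (const id of denied) {
    const body = PARTNER_REPLIES.get(id);
    if (body !== undefined) {
      return { status: 200, body };
    }
  }
  // No partner matched: refuse the request.
  return { status: 403, body: "Forbidden" };
}
```

Inside the `decision.isDenied() && decision.reason.isBot()` branch, you could then write `const { status, body } = replyForDeniedBots(decision.reason.denied);` followed by `return new Response(body, { status });`. Adding another partner then takes one map entry, and you can unit test the policy without sending a request.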

The great thing about defining these rules in code is that you can also test them locally. Use curl with a custom user agent to check that the response is as expected:

```shell
curl -A "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)" http://localhost:3000
```

Local testing allows you to confirm that bots get the desired response and that you don’t accidentally block legitimate users. This can be incorporated into automated integration tests to run as part of your test suite. See our Testing docs for more information.
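You can script the same check for an automated test suite. This sketch sends one request per user agent and reports any status that differs from what you expect. The user agents, the expected statuses, and the `findMismatches` helper are all illustrative assumptions. The expected statuses assume the GET handler above is served at the base URL. `fetchImpl` is a parameter so the function can be exercised with a stub.

```typescript
// Illustrative test cases: expected statuses assume the GET handler above,
// which answers GPTBot with "Hello OpenAI" (HTTP 200) and lets browsers through.
interface UserAgentCase {
  name: string;
  userAgent: string;
  expectedStatus: number;
}

const CASES: UserAgentCase[] = [
  {
    name: "GPTBot gets the custom OpenAI response",
    userAgent:
      "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)",
    expectedStatus: 200,
  },
  {
    name: "Regular browser is not blocked",
    userAgent:
      "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    expectedStatus: 200,
  },
];

// Minimal fetch signature so a stub can stand in for the real fetch.
type FetchLike = (
  url: string,
  init: { headers: Record<string, string> },
) => Promise<{ status: number }>;

// Send one request per case and collect a message for every unexpected status.
async function findMismatches(
  baseUrl: string,
  cases: readonly UserAgentCase[],
  fetchImpl: FetchLike,
): Promise<string[]> {
  const failures: string[] = [];
  for (const testCase of cases) {
    const res = await fetchImpl(baseUrl, {
      headers: { "User-Agent": testCase.userAgent },
    });
    if (res.status !== testCase.expectedStatus) {
      failures.push(`${testCase.name}: expected ${testCase.expectedStatus}, got ${res.status}`);
    }
  }
  return failures;
}
```

Against a running dev server, you might call `findMismatches("http://localhost:3000", CASES, fetch)` on Node.js 18 or later, where `fetch` is built in. Fail the test run if the returned array is non-empty.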

Understanding which bots and AI scrapers can be detected, and how they’re identified, is important for creating accurate rules. You want to be sure that only the bots you intend to deny are actually blocked.

We’re now publishing our list of bot identifiers as an open source repository, based on the excellent work in Martin Monperrus’s crawler-user-agents repository.

We’ve contributed new bot identifiers and some general tidying upstream whilst forking it to add our own identifiers. These additions are used in the Arcjet SDK to provide auto-complete and type checking for valid bot names.
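Auto-complete and type checking like this typically come from deriving a string-literal union type from a constant list. The sketch below shows the general TypeScript technique with a tiny illustrative subset of identifiers. `BotRuleEntry`, `allowList`, and `isBotRuleEntry` are hypothetical names rather than the SDK's actual types.

```typescript
// Illustrative subset: in the real SDK the identifiers come from the
// published bot list, and these type and function names are hypothetical.
const BOT_IDS = ["CURL", "GOOGLE_CRAWLER", "OPENAI_CRAWLER", "PERPLEXITY_CRAWLER"] as const;
const BOT_CATEGORY_NAMES = ["CATEGORY:AI", "CATEGORY:PREVIEW", "CATEGORY:SEARCH_ENGINE"] as const;

// Deriving union types from the const arrays lets editors suggest valid
// names and lets the compiler reject misspellings.
type BotId = (typeof BOT_IDS)[number];
type BotCategory = (typeof BOT_CATEGORY_NAMES)[number];
type BotRuleEntry = BotId | BotCategory;

// Hypothetical rule builder: only known identifiers or categories compile.
function allowList(...entries: BotRuleEntry[]): BotRuleEntry[] {
  return entries;
}

const rule = allowList("CATEGORY:PREVIEW", "CURL");
// allowList("CRUL"); // compile error: "CRUL" is not a valid BotRuleEntry

// Runtime counterpart for untrusted strings, e.g. names read from a config file.
const KNOWN_ENTRIES = new Set<string>([...BOT_IDS, ...BOT_CATEGORY_NAMES]);

function isBotRuleEntry(value: string): value is BotRuleEntry {
  return KNOWN_ENTRIES.has(value);
}
```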

This is part of our basic bot detection functionality, which uses the user agent to identify well-behaved bots. Contributions are welcome!
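User-agent based detection boils down to matching the `User-Agent` header against a list of known patterns. Here is a minimal sketch that assumes each entry carries an identifier, a category, and a regular expression. The upstream crawler-user-agents list stores regex patterns in a `pattern` field. The entries, categories, and the `identifyBot` helper below are illustrative.

```typescript
// Hypothetical entry shape: an identifier, a category, and a regular
// expression tested against the User-Agent header. Ids and categories
// here are illustrative, not copied from the published list.
interface BotEntry {
  id: string;
  category: string;
  pattern: string;
}

const BOT_LIST: BotEntry[] = [
  { id: "OPENAI_CRAWLER", category: "CATEGORY:AI", pattern: "GPTBot" },
  { id: "GOOGLE_CRAWLER", category: "CATEGORY:SEARCH_ENGINE", pattern: "Googlebot" },
  { id: "CURL", category: "CATEGORY:TOOL", pattern: "^curl/" },
];

// Compile each pattern once up front rather than on every request.
const COMPILED = BOT_LIST.map((entry) => ({ entry, regex: new RegExp(entry.pattern) }));

// Return the first entry whose pattern matches, or undefined when the user
// agent does not identify itself as a known bot.
function identifyBot(userAgent: string): BotEntry | undefined {
  return COMPILED.find(({ regex }) => regex.test(userAgent))?.entry;
}
```

Any client can send any user agent, so this approach only catches bots that identify themselves honestly. That is why the advanced detection described below relies on additional signals such as IP address analysis.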

This new functionality is available to all users for free today! Sign up now to get going with Arcjet’s security SDK for developers.

More advanced bot detection, which can identify badly behaved bots based on additional metadata such as IP address analysis, is available as part of our paid plans. Find out more about our pricing and learn more about bot detection in our docs.
