Building a minimalist web server using the Go standard library + Tailwind CSS

How to build a website with dynamic HTML and a modern UI using Tailwind CSS using only the Go standard library. Embrace the minimalist web server!

Using Go + Gin to reimplement our backend REST API. How we built the golden API: performance & scalability, comprehensive docs, security, authentication, and testability.

At some point, every company reaches a crossroads where they need to stop and reassess the tools they've been using. For us, that moment came when we realized that the API powering our web dashboard had become unmanageable, hard to test, and didn't meet the standards we set for our codebase.

Arcjet is primarily a security-as-code SDK that helps developers implement security functionality like bot detection, email validation, and PII detection. The SDK communicates with our high-performance, low-latency Decision API over gRPC.

Our web dashboard uses a separate REST API, primarily to manage site connections and review analytics for processed requests. However, it also handles signing up new users and managing their accounts, so it’s still an important part of the product.

So, we decided to take on the challenge of rebuilding our API from the ground up, this time with a focus on maintainability, performance, and scalability. However, we didn’t want to embark on a huge rewrite project (that never works out well). Instead, we decided to build a new foundation and then start with a single API endpoint.

In this post, I’ll discuss how we approached this.

When speed was our top priority, Next.js provided the most convenient solution for building API endpoints that our frontend could consume. It gave us seamless full-stack development within a single codebase, and we didn’t have to worry too much about infrastructure because we deployed on Vercel.

Our focus was on our security-as-code SDK and low-latency Decision API, so for the frontend dashboard this stack allowed us to prototype features quickly with little friction.

Our Stack: Next.js, DrizzleORM, useSWR, NextAuth

However, as our product evolved, we found that combining all our API endpoints and frontend code in the same project led to a tangled mess.

Testing our API became cumbersome (and is very difficult to do with Next.js anyway), and we needed a system that could handle both internal and external consumption. As we integrated with more platforms (like Vercel, Fly.io, and Netlify), we realized that speed of development alone wasn't enough. We needed a more robust solution.

As part of this project we also wanted to address a lingering security concern about how Vercel requires you to expose your database publicly. Unless you pay for their enterprise “secure compute”, connecting to a remote database requires it to have a public endpoint. We prefer to lock down our database so it can only be accessed via a private network. Defense in depth is important and this would be another layer of protection.

This led us to decide to decouple the frontend UI from the backend API.

What is "The Golden API"? It’s not a specific technology or framework—it’s more of an ideal set of principles that define a well-built API. While developers may have their own preferences for languages and frameworks, there are certain concepts valid across tech stacks that most can agree on for building a high-quality API.

We already have experience delivering high-performance APIs. Our Decision API is deployed close to our customers, uses Kubernetes to scale dynamically, and is optimized for low-latency responses.

We considered serverless environments and other providers, but since our Kubernetes clusters were already running, it made the most sense to reuse the infrastructure in place: deployments through Octopus Deploy, and monitoring through Grafana with Jaeger, Loki, Prometheus, etc.

After a short internal Rust vs. Go bake-off, we chose Go for its simplicity and speed, and for how well it meets its original goal of supporting scalable network services. We also already use Go for the Decision API and understand how to operate Go services, which settled the decision for us.

Switching the backend API to Go was straightforward thanks to its simplicity and the availability of great tools. But there was one catch: we were keeping the Next.js frontend and didn’t want to manually write TypeScript types or maintain separate documentation for our new API.

Enter OpenAPI—a perfect fit for our needs. OpenAPI allows us to define a contract between the frontend and backend, while also serving as our documentation. This solves the problem of maintaining a schema for both sides of the app.

Integrating authentication in Go wasn’t too difficult, thanks to NextAuth being relatively straightforward to mimic on the backend. NextAuth (now Auth.js) has APIs available to verify a session.

This meant we could have a TypeScript client in the frontend generated from our OpenAPI spec making fetch calls to the backend API. The credentials are automatically included in the fetch call and the backend can verify the session with NextAuth.
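NextAuth exposes a REST endpoint, `GET /api/auth/session`, that returns the client-safe session object, or an empty object when nobody is signed in. The sketch below shows one way a Go backend could lean on it, assuming the API can reach the Next.js app over the network: a middleware forwards the incoming `Cookie` header to that endpoint and only lets the request through when a user comes back. `RequireSession`, the context key, and the `next-auth.session-token` cookie used in the demo are illustrative assumptions, not necessarily how our production code verifies sessions.

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// session mirrors the JSON from NextAuth's GET /api/auth/session: an object with
// a "user" field, or {} when signed out. Only the fields used here are decoded.
type session struct {
	User *struct {
		Email string `json:"email"`
	} `json:"user"`
}

type userEmailKey struct{}

// RequireSession (hypothetical name) forwards the caller's cookies to the NextAuth
// session endpoint and only calls next when a signed-in user is returned.
func RequireSession(sessionURL string, client *http.Client, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		req, err := http.NewRequestWithContext(r.Context(), http.MethodGet, sessionURL, nil)
		if err != nil {
			http.Error(w, "internal error", http.StatusInternalServerError)
			return
		}
		// Pass the browser's session cookie through unchanged.
		if c := r.Header.Get("Cookie"); c != "" {
			req.Header.Set("Cookie", c)
		}

		resp, err := client.Do(req)
		if err != nil {
			http.Error(w, "session check failed", http.StatusBadGateway)
			return
		}
		defer resp.Body.Close()

		var s session
		if resp.StatusCode != http.StatusOK ||
			json.NewDecoder(resp.Body).Decode(&s) != nil || s.User == nil {
			http.Error(w, "unauthorized", http.StatusUnauthorized)
			return
		}

		// Expose the authenticated user to downstream handlers via the context.
		ctx := context.WithValue(r.Context(), userEmailKey{}, s.User.Email)
		next.ServeHTTP(w, r.WithContext(ctx))
	})
}

// demoRequireSession wires the middleware to a fake NextAuth endpoint and returns
// the status codes for a request with and without a (made-up) session cookie.
func demoRequireSession() (allowed, denied int) {
	fakeAuth := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if c, err := r.Cookie("next-auth.session-token"); err == nil && c.Value == "valid" {
			fmt.Fprint(w, `{"user":{"email":"dev@example.com"}}`)
			return
		}
		fmt.Fprint(w, `{}`)
	}))
	defer fakeAuth.Close()

	protected := RequireSession(fakeAuth.URL+"/api/auth/session", fakeAuth.Client(),
		http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			w.WriteHeader(http.StatusOK)
		}))

	req := httptest.NewRequest(http.MethodGet, "/teams/t1/sites", nil)
	req.AddCookie(&http.Cookie{Name: "next-auth.session-token", Value: "valid"})
	rec := httptest.NewRecorder()
	protected.ServeHTTP(rec, req)
	allowed = rec.Code

	rec = httptest.NewRecorder()
	protected.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/teams/t1/sites", nil))
	denied = rec.Code
	return allowed, denied
}
```

Calling the session endpoint on every request adds a network round trip, so a production version of this idea might cache results briefly.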

Writing any kind of test in Go is very easy, and there are plenty of examples out there covering how to test HTTP handlers.

It’s also much easier to write tests for the new Go API endpoints compared to the Next.js API, particularly because we want to test authenticated state and real database calls. We were able to easily write tests for the Gin router and spin up real integration tests against our Postgres database using Testcontainers.

We started by writing the OpenAPI 3.0 specification for our API. An OpenAPI-first approach promotes designing the API contract before implementation, ensuring that all stakeholders (developers, product managers, and clients) agree on the API's behavior and structure before any code is written. It encourages careful planning and results in a well-thought-out API design that is consistent and adheres to established best practices. These are the reasons why we chose to write the spec first and generate the code from it, as opposed to the other way around.

My tool of choice for this was API Fiddle, which helps you quickly draft and test OpenAPI specs. However, API Fiddle only supports OpenAPI 3.1 (which we couldn’t use because many libraries hadn’t adopted it), so we stuck with version 3.0 and wrote the spec by hand.

Here’s an example of what the spec for our API looked like:

```yaml
openapi: 3.0.0
info:
  title: Arcjet Sites API
  description: A CRUD API to manage sites.
  version: 1.0.0
servers:
  - url: https://api.arcjet.com/v1
    description: Base URL for all API operations
paths:
  /teams/{teamId}/sites:
    get:
      operationId: GetTeamSites
      summary: Get a list of sites for a team
      description: Returns a list of all Sites associated with a given Team.
      parameters:
        - name: teamId
          in: path
          required: true
          description: The ID of the team
          schema:
            type: string
      responses:
        "200":
          description: A list of sites
          content:
            application/json:
              schema:
                type: array
                items:
                  $ref: "#/components/schemas/Site"
        default:
          description: unexpected error
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Error"
components:
  schemas:
    Site:
      type: object
      properties:
        id:
          type: string
          description: The ID of the site
        name:
          type: string
          description: The name of the site
        teamId:
          type: string
          description: The ID of the team this site belongs to
        createdAt:
          type: string
          format: date-time
          description: The timestamp when the site was created
        updatedAt:
          type: string
          format: date-time
          description: The timestamp when the site was last updated
        deletedAt:
          type: string
          format: date-time
          nullable: true
          description: The timestamp when the site was deleted (if applicable)
    Error:
      required:
        - code
        - message
        - details
      properties:
        code:
          type: integer
          format: int32
          description: Error code
        message:
          type: string
          description: Error message
        details:
          type: string
          description: Details that can help resolve the issue
```

With the OpenAPI spec in place, we used oapi-codegen, a tool that automatically generates Go code from the OpenAPI specification. It generates all the necessary types, handlers, and error handling structures, making the development process much smoother.

```go
//go:generate go run github.com/oapi-codegen/oapi-codegen/v2/cmd/oapi-codegen --config=config.yaml ../../api.yaml
```

The output was a set of Go files, one containing the server skeleton (an interface with one method per operation, which our handlers implement) and another with the model types. Here's an example of a Go type generated for the Site object:

```go
// Site defines model for Site.
type Site struct {
	// CreatedAt The timestamp when the site was created
	CreatedAt *time.Time `json:"createdAt,omitempty"`

	// DeletedAt The timestamp when the site was deleted (if applicable)
	DeletedAt *time.Time `json:"deletedAt"`

	// Id The ID of the site
	Id *string `json:"id,omitempty"`

	// Name The name of the site
	Name *string `json:"name,omitempty"`

	// TeamId The ID of the team this site belongs to
	TeamId *string `json:"teamId,omitempty"`

	// UpdatedAt The timestamp when the site was last updated
	UpdatedAt *time.Time `json:"updatedAt,omitempty"`
}
```

With the generated code in place, we were able to implement the API handler logic, like this:

```go
func (s Server) GetTeamSites(w http.ResponseWriter, r *http.Request, teamId string) {
	ctx := r.Context()

	// Check user has permission to access team resources
	isAllowed, err := s.userIsAllowed(ctx, teamId)
	if err != nil {
		slog.ErrorContext(
			ctx,
			"failed to check permissions",
			slogext.Err("err", err),
			slog.String("teamId", teamId),
		)
		SendInternalServerError(ctx, w)
		return
	}

	if !isAllowed {
		SendForbidden(ctx, w)
		return
	}

	// Retrieve sites from database
	sites, err := s.repo.GetSitesForTeam(ctx, teamId)
	if err != nil {
		slog.ErrorContext(
			ctx,
			"list sites for team query returned an error",
			slogext.Err("err", err),
			slog.String("teamId", teamId),
		)
		SendInternalServerError(ctx, w)
		return
	}

	SendOk(ctx, w, sites)
}
```

Drizzle is a great ORM for JS projects and we’d use it again, but moving the database code out of Next.js meant that we needed something similar for Go.

We chose GORM as our ORM and used the Repository Pattern to abstract database interactions. This allowed us to write clean, testable database queries.

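```go
package repo

import (
	"context"
	"fmt"

	"gorm.io/gorm"
)

// ApiRepo abstracts database access so handlers can be tested against a mock.
type ApiRepo interface {
	GetSitesForTeam(ctx context.Context, teamId string) ([]Site, error)
}

type apiRepo struct {
	db *gorm.DB
}

func (r apiRepo) GetSitesForTeam(ctx context.Context, teamId string) ([]Site, error) {
	var sites []Site
	result := r.db.WithContext(ctx).Where("team_id = ?", teamId).Find(&sites)
	if result.Error != nil {
		// Find returns an empty slice (not gorm.ErrRecordNotFound) when no rows match,
		// so an error here is a real query failure: wrap it instead of masking it.
		return nil, fmt.Errorf("get sites for team %q: %w", teamId, result.Error)
	}
	return sites, nil
}
```

Testing is critical for us. We wanted to ensure that all database calls were properly tested, so we used Testcontainers to spin up a real database for our tests, closely mirroring our production setup.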

```go
type ApiRepoTestSuite struct {
	suite.Suite
	container *postgres.PostgresContainer
}

func (suite *ApiRepoTestSuite) SetupSuite() {
	// Start a throwaway Postgres 16 container shared by the whole suite
	container, err := postgres.Run(context.Background(), "postgres:16", ...)
	require.NoError(suite.T(), err)
	suite.container = container
}
```

After setting up the test environment, we tested all CRUD operations as we would in production, ensuring that our code behaves correctly.

```go
func (suite *ApiRepoTestSuite) Test_CRUD() {
	ctx := context.Background()

	// Connect to the container DB
	dsn, err := suite.container.ConnectionString(ctx, "sslmode=disable")
	require.NoError(suite.T(), err)
	db, err := gorm.Open(gormpg.Open(dsn), &gorm.Config{})
	require.NoError(suite.T(), err)

	// Create the subject we're testing
	testRepo, err := NewApiRepo(db)
	require.NoError(suite.T(), err)

	// Create site
	siteName := "Test Site"
	teamId := "teamid"
	site, err := testRepo.CreateSite(ctx, siteName, teamId)
	require.NoError(suite.T(), err)
	//...

	// Update site
	updatedSiteName := "Updated Test Site"
	updatedSite, err := testRepo.UpdateSite(ctx, site.ID, updatedSiteName)
	require.NoError(suite.T(), err)
	//...

	// Get site
	getSite, err := testRepo.GetSiteById(ctx, updatedSite.ID)
	require.NoError(suite.T(), err)
	//...

	// Delete site
	deleteSite, err := testRepo.DeleteSite(ctx, updatedSite.ID)
	require.NoError(suite.T(), err)
	//...

	// Get deleted site - should not return records
	getDeletedSite, err := testRepo.GetSiteById(ctx, updatedSite.ID)
	require.Error(suite.T(), err)
	//...
}
```

For testing our API handlers, we used Go's httptest package and mocked the database interactions using Mockery. This allowed us to focus on testing the API logic without worrying about database issues.

```go
func (suite *ApiTestSuite) SetupTest() {
	// Mockery-generated mock of the ApiRepo interface
	testRepo := repo.NewMockApiRepo(suite.T())
	suite.testRepo = testRepo

	testServer := NewServer(testRepo)
	suite.testServer = testServer
}

func (suite *ApiTestSuite) Test_CreateSite() {
	siteName := "Test Site Name"
	teamId := "teamid"

	// Setup: expect the handler to call CreateSite with these arguments
	suite.testRepo.On("CreateSite", mock.Anything, siteName, teamId).
		Return(&repo.Site{
			// ...
		}, nil)

	// Where the response from the request will be written to
	w := httptest.NewRecorder()

	requestBody, err := json.Marshal(&NewSite{
		Name: siteName,
	})
	require.NoError(suite.T(), err)

	request := httptest.NewRequest(
		http.MethodPost,
		fmt.Sprintf("/teams/%s/sites", teamId),
		bytes.NewReader(requestBody),
	)

	// Run
	suite.testServer.CreateSite(w, request, teamId)

	// Assert
	body := w.Body.String()
	require.Equal(suite.T(), ..., body) // expected body elided
}
```

Once our API was tested and deployed, we turned our attention to the frontend.

Our previous API calls were made using Next.js’ recommended fetch API with caching built in. For more dynamic views, some components used SWR on top of fetch so we could get type-safe, auto-reloading data fetching calls.

To consume the API on the frontend, we used the openapi-typescript library, which generates TypeScript types from our OpenAPI schema. This made it easy to integrate the backend with our frontend without having to manually sync data models. The same project also provides a typed fetch client (openapi-fetch) and a TanStack Query integration (openapi-react-query), so our data fetching uses fetch under the hood but stays in sync with our schema.

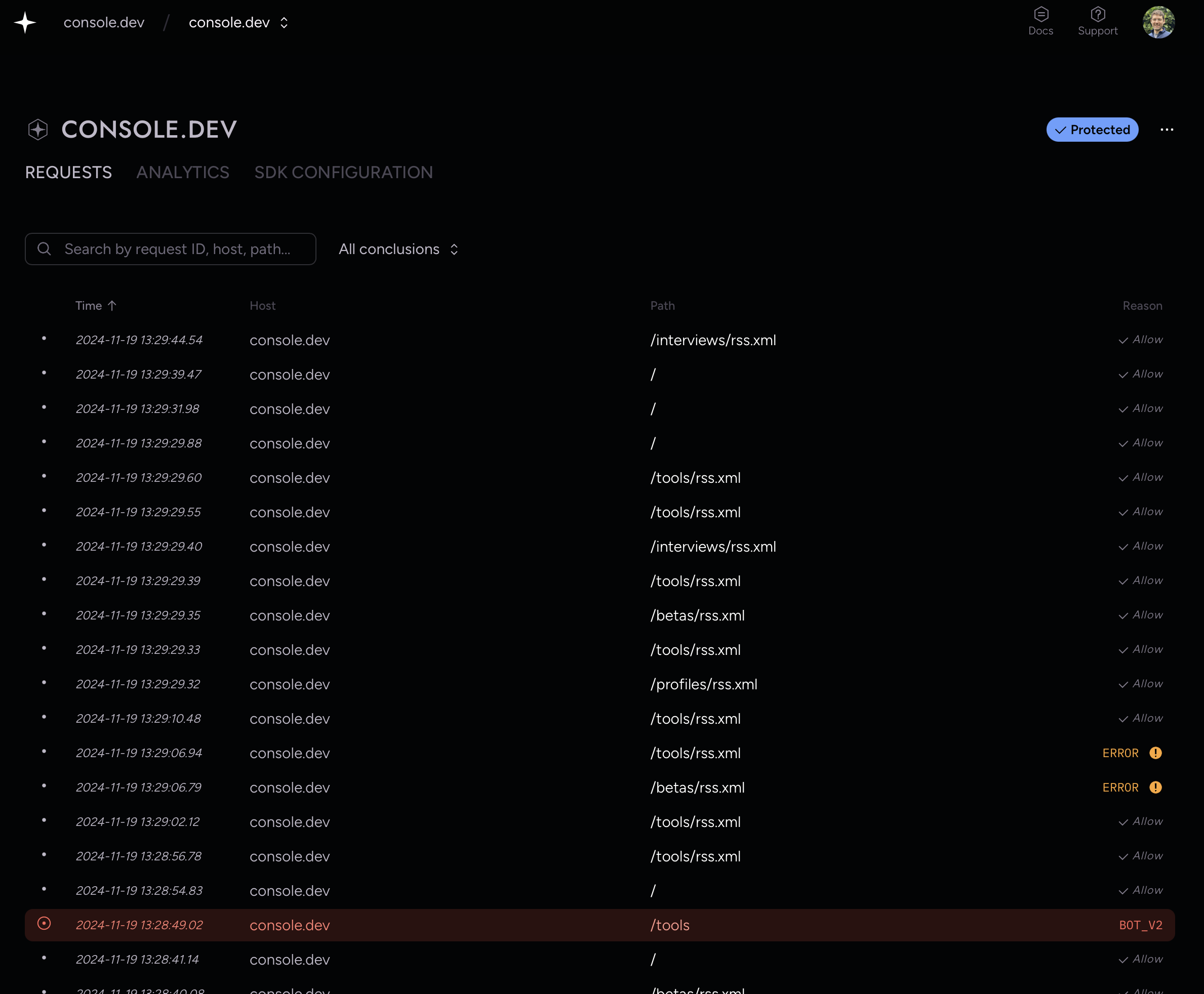

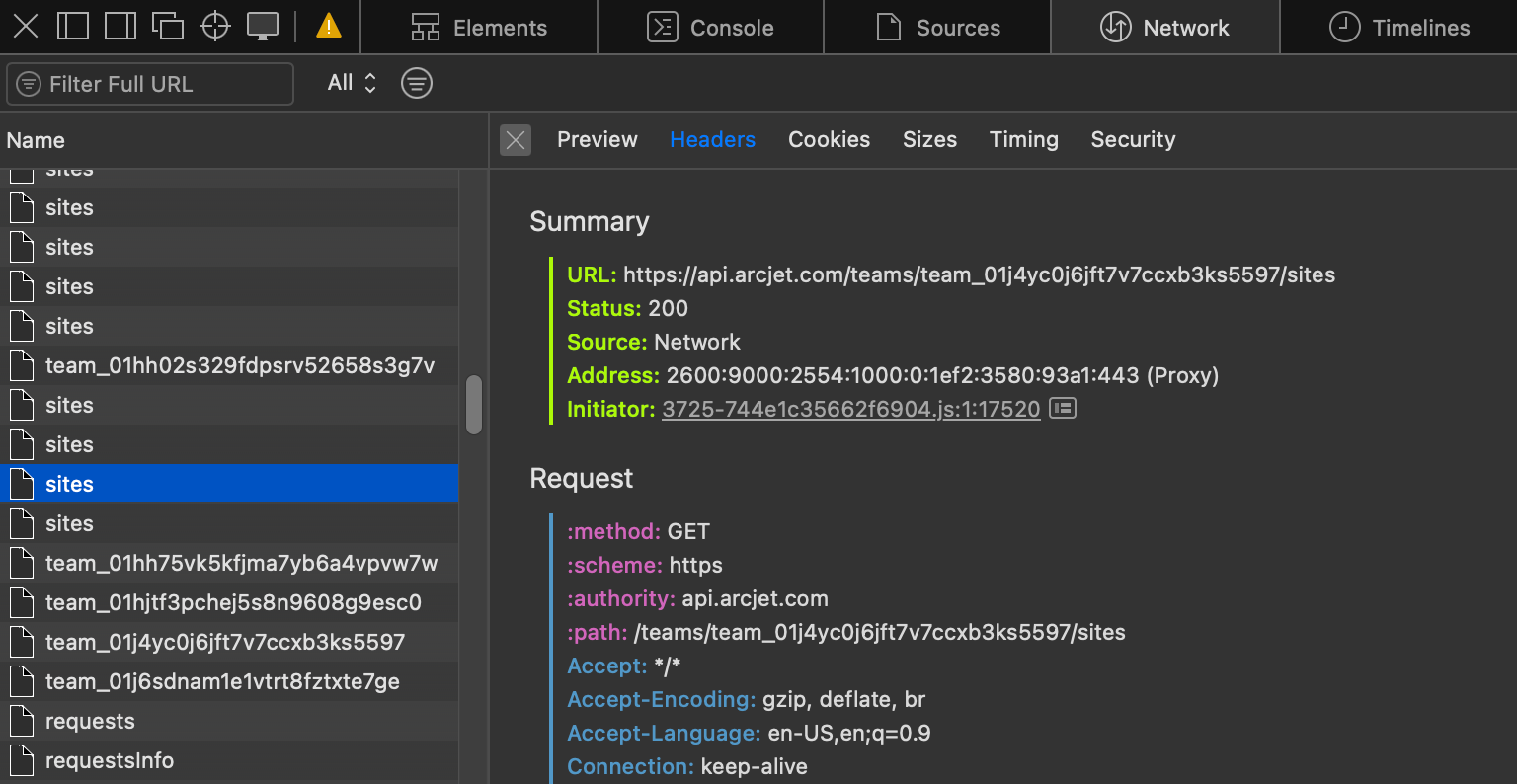

We're gradually migrating the API endpoints over to the new Go server and making small improvements along the way. If you open your browser's inspector, you'll see those new requests going to api.arcjet.com.

So, did we achieve the elusive Golden API? Let’s check the box:

We went further with:

In the end, we’re happy with the results. Our API is faster, more secure, and better tested. The transition to Go was worth it and we’re now better positioned to scale and maintain our API as our product grows.
